Intuitive Joint Priors for Bayesian Multilevel Models

Regression models are ubiquitous in the quantitative sciences, making up a large share of all statistical analyses performed on data. Data in these fields often have a multilevel structure, for example because of natural groupings of individuals or repeated measurements of the same individuals. Multilevel models (MLMs) are designed specifically to account for the nested structure in such data and are a widely applied class of regression models.

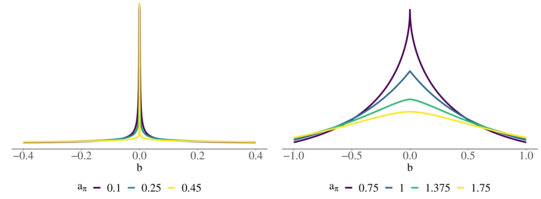

From a Bayesian perspective, the widespread success of MLMs can be explained by the fact that they impose joint priors over a set of parameters with shared hyper-parameters, rather than separate independent priors for each parameter. However, in almost all state-of-the-art approaches, the different additive regression terms in an MLM, each corresponding to a different parameter set, still receive mutually independent priors. As more and more terms are added to the model while the number of observations remains constant, such models will overfit the data. This is highly problematic because it leads to unreliable or uninterpretable estimates, poor out-of-sample predictions, and inflated Type I error rates.
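The contrast between independent and joint priors can be illustrated with a small prior simulation. The sketch below is only an assumption-laden toy and not necessarily the prior developed in this project: each additive term is represented by a single variance, the independent case gives every term its own half-normal standard deviation, and the joint case draws one shared total variance and splits it across terms with Dirichlet weights. The function names, the half-normal scale, and the symmetric Dirichlet are all hypothetical choices made for illustration.

```python
import numpy as np


def total_prior_variance_independent(n_terms, rng, n_draws=20000):
    """Total prior variance when every term has its own independent prior.

    Assumption: each term's standard deviation is half-normal(0, 1).
    """
    sds = np.abs(rng.normal(0.0, 1.0, size=(n_draws, n_terms)))
    return (sds ** 2).sum(axis=1)


def total_prior_variance_joint(n_terms, rng, n_draws=20000):
    """Total prior variance under a joint prior with a shared hyper-parameter.

    Assumption: one total variance is drawn first, then divided among the
    terms with symmetric Dirichlet weights that sum to one.
    """
    total = np.abs(rng.normal(0.0, 1.0, size=n_draws)) ** 2
    weights = rng.dirichlet(np.ones(n_terms), size=n_draws)
    per_term = weights * total[:, None]  # variance allocated to each term
    return per_term.sum(axis=1)


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    for n_terms in (2, 20, 200):
        independent = total_prior_variance_independent(n_terms, rng).mean()
        joint = total_prior_variance_joint(n_terms, rng).mean()
        print(f"terms={n_terms:4d}  independent={independent:8.2f}  joint={joint:6.2f}")
```

Under these assumptions, the implied total variance in the independent case grows roughly in proportion to the number of terms, whereas in the joint case it stays on the same scale no matter how many terms are added. This is one way to see why independent priors put increasing prior mass on highly flexible fits as a model grows.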

To address these challenges, this project aims to develop, evaluate, implement, and apply intuitive joint priors for Bayesian MLMs. We hypothesize that the priors we develop will enable reliable and interpretable estimation of much more complex Bayesian MLMs than was previously possible.

Project Members: Javier Aguilar

Funders: TU Dortmund University, German Research Foundation (DFG)